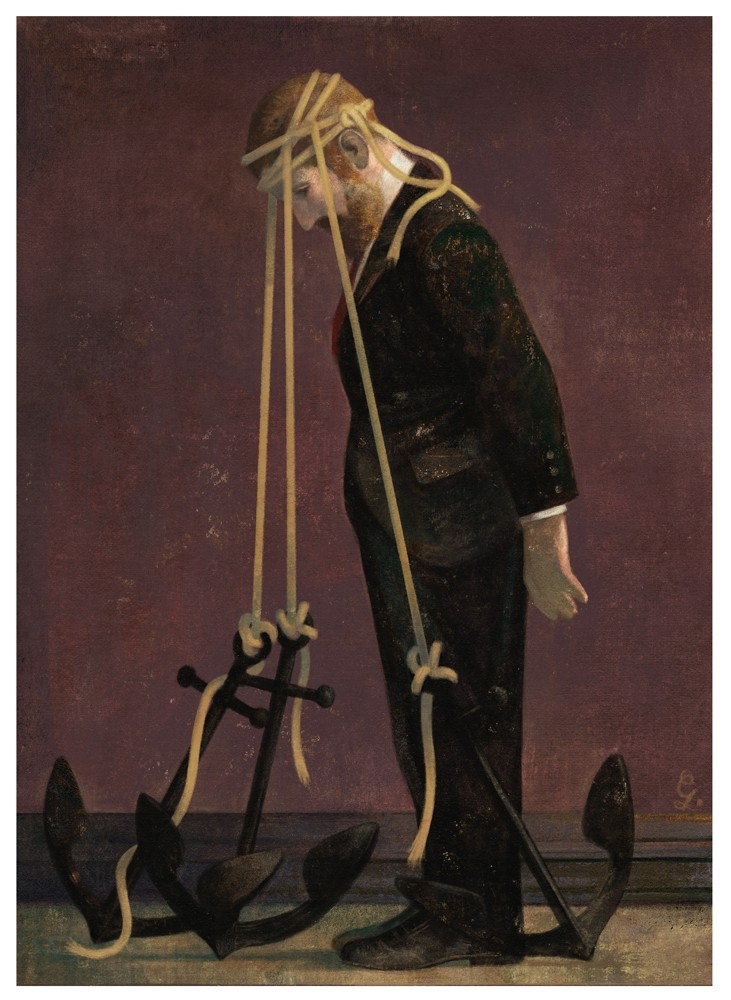

Illustration by Gérard DuBois

The Limits of Reason and Why Facts Don’t Change Our Minds

The human capacity for reason is often held up as a hallmark of intelligence, setting us apart from other species. However, studies reveal that the ways in which we use reason may not always lead to clear thinking.

In fact, new findings about the mind suggest that humans may be more inclined to use reason as a tool for winning arguments than for finding the truth. This tendency sheds light on why facts often don’t change our minds and why beliefs, once deeply rooted, can be so resistant to change.

The Stanford Study on Belief Persistence

One of the most compelling studies exploring this phenomenon was conducted at Stanford in 1975. Researchers invited a group of students to participate in an experiment that focused on analyzing suicide notes. The students were presented with pairs of notes; one in each pair had been written by someone who later committed suicide, while the other was fabricated. The students’ task was to determine which notes were real and which were fake.

Some students were told that they had performed well on this task, while others were informed they had done poorly. The feedback, however, was deliberately misleading: those who received positive feedback were not necessarily better at identifying real notes than the others. When the researchers revealed the deception, many students still clung to the impression of their own skill that the fake feedback had created.

This study highlighted what psychologists call “belief perseverance,” the phenomenon where people continue to hold on to beliefs even after the evidence for them has been discredited. It revealed a fundamental truth about human psychology: once we form an opinion, changing it can be difficult, even in the face of conflicting facts.

Why Reason Alone Is Often Not Enough

In theory, reasoning should help us evaluate evidence and adjust our beliefs accordingly. But research has shown that the human mind is not solely driven by the pursuit of truth. Instead, we use reasoning to defend our own viewpoints, often ignoring or minimizing any evidence that might contradict our beliefs. This tendency is called “motivated reasoning,” where emotions and personal biases shape our interpretation of facts. Motivated reasoning helps explain why political, social, and even scientific debates can become so polarized.

When people engage in discussions, particularly with those who hold opposing views, they tend to reinforce their own beliefs rather than re-evaluate them. This pattern suggests that reasoning is often less about finding objective truth and more about supporting pre-existing beliefs.

The Role of Cognitive Biases

Another layer to this phenomenon lies in cognitive biases—mental shortcuts our brains take to process information quickly. Cognitive biases shape how we perceive reality, often leading us to draw conclusions that may not be accurate. Here are a few common biases that affect how we interpret facts:

- Confirmation Bias: This is the tendency to seek out information that confirms our pre-existing beliefs. When faced with information that contradicts these beliefs, we are more likely to dismiss or ignore it.

- Anchoring Bias: Anchoring happens when we rely too heavily on the first piece of information we receive, which shapes our interpretation of all future data, even if the initial information is misleading.

- The Dunning-Kruger Effect: People often overestimate their own knowledge and abilities, particularly in fields where they have limited expertise. This can lead to strong opinions on topics they may not fully understand.

- The Backfire Effect: When individuals encounter information that contradicts their beliefs, they sometimes double down on their original views, becoming even more entrenched rather than open to change.

These cognitive biases have been studied extensively and play a large role in why facts, no matter how compelling, don’t always change our minds.

The Power of Group Identity and Social Influence

Human beings are inherently social creatures, and group identity can significantly impact the way we reason. We tend to align our beliefs with those of the groups to which we belong, and this influence can be stronger than any logical argument or evidence. Known as “in-group bias,” this tendency makes us more likely to believe information that comes from within our social, cultural, or political groups.

In many cases, group identity even overrides individual reasoning. People might believe in certain ideas because they fit within their group’s views, not because they’ve independently assessed the facts. This dynamic plays a major role in shaping public opinion, political allegiance, and even responses to scientific evidence.

Practical Implications of Belief Persistence

The phenomenon of belief persistence has widespread implications, affecting areas as diverse as politics, health, and social policy. For instance, public health campaigns face significant challenges in getting people to change their views on topics such as vaccination, diet, and exercise. In politics, where facts and data are often disputed, deeply ingrained beliefs can lead to the spread of misinformation, influencing voting patterns and public opinion.

Understanding belief persistence is particularly important in the digital age, where people are constantly exposed to information that reinforces their existing views. Social media algorithms, for instance, often present users with content that aligns with their interests and past behavior, creating echo chambers that can strengthen belief systems and further polarize opinions.

Strategies for Countering Belief Persistence

While changing someone’s mind isn’t easy, especially when their beliefs are deeply ingrained, a few strategies can help in overcoming belief persistence:

- Engage with Empathy: When discussing opposing viewpoints, approach the conversation with empathy rather than confrontation. People are more likely to listen to new information when they feel respected rather than attacked.

- Present Facts Clearly and Simply: Burying someone in data can overwhelm them and cause them to shut down. Instead, present a few clear, well-supported facts and give them time to process the information.

- Encourage Self-Reflection: Asking people why they believe what they do and encouraging them to think about the origins of their beliefs can help open the door to re-evaluation.

- Promote Critical Thinking: Encouraging critical thinking skills, particularly among young people, can help them approach information with a more open mind and evaluate it more objectively.

- Introduce New Information Gradually: Sudden exposure to opposing views can trigger a defensive reaction. By slowly introducing new information over time, people may be more willing to consider alternative perspectives.

FAQs: The Limits of Human Reason and Belief Persistence

Why do people hold on to beliefs even when they are shown to be false?

Belief persistence occurs because of cognitive biases and motivated reasoning, where people prioritize protecting their beliefs over evaluating facts objectively. Once a belief is established, it can become part of a person’s identity, making it difficult to change.

Is it possible to change someone’s mind with facts?

While facts can sometimes influence beliefs, especially if presented clearly and respectfully, changing someone’s mind often requires more than evidence alone. Emotional and social factors also play a crucial role in shaping beliefs.

How do cognitive biases affect our reasoning?

Cognitive biases, such as confirmation bias and the backfire effect, influence how we interpret information. They act as mental shortcuts that can distort our perception of reality and make us more resistant to facts that challenge our views.

What role does group identity play in shaping beliefs?

Group identity has a powerful effect on beliefs. People often align their opinions with those of their social, cultural, or political groups, which can lead to the adoption of beliefs without critically assessing them.

Can we become more open-minded?

Yes, with effort. By practicing empathy, improving critical thinking skills, and questioning our own assumptions, we can learn to approach information more objectively and be open to revising our beliefs.

Conclusion

In exploring the limits of reason, we learn that the human mind is not solely driven by a desire for truth. While reasoning should ideally help us find clarity, it often serves to reinforce what we already believe. Recognizing this tendency is the first step in understanding how beliefs persist and why changing minds is such a challenge. By fostering empathy, promoting critical thinking, and engaging in open discussions, we can work toward a more balanced approach to reason, allowing facts and open-mindedness to play a larger role in shaping our views and the world around us.
